The purpose of this project was to build on our previous forays into GPU programming by working with several common graphics topics: noise, displacement maps, tessellation shaders, and full-screen post-processing effects.

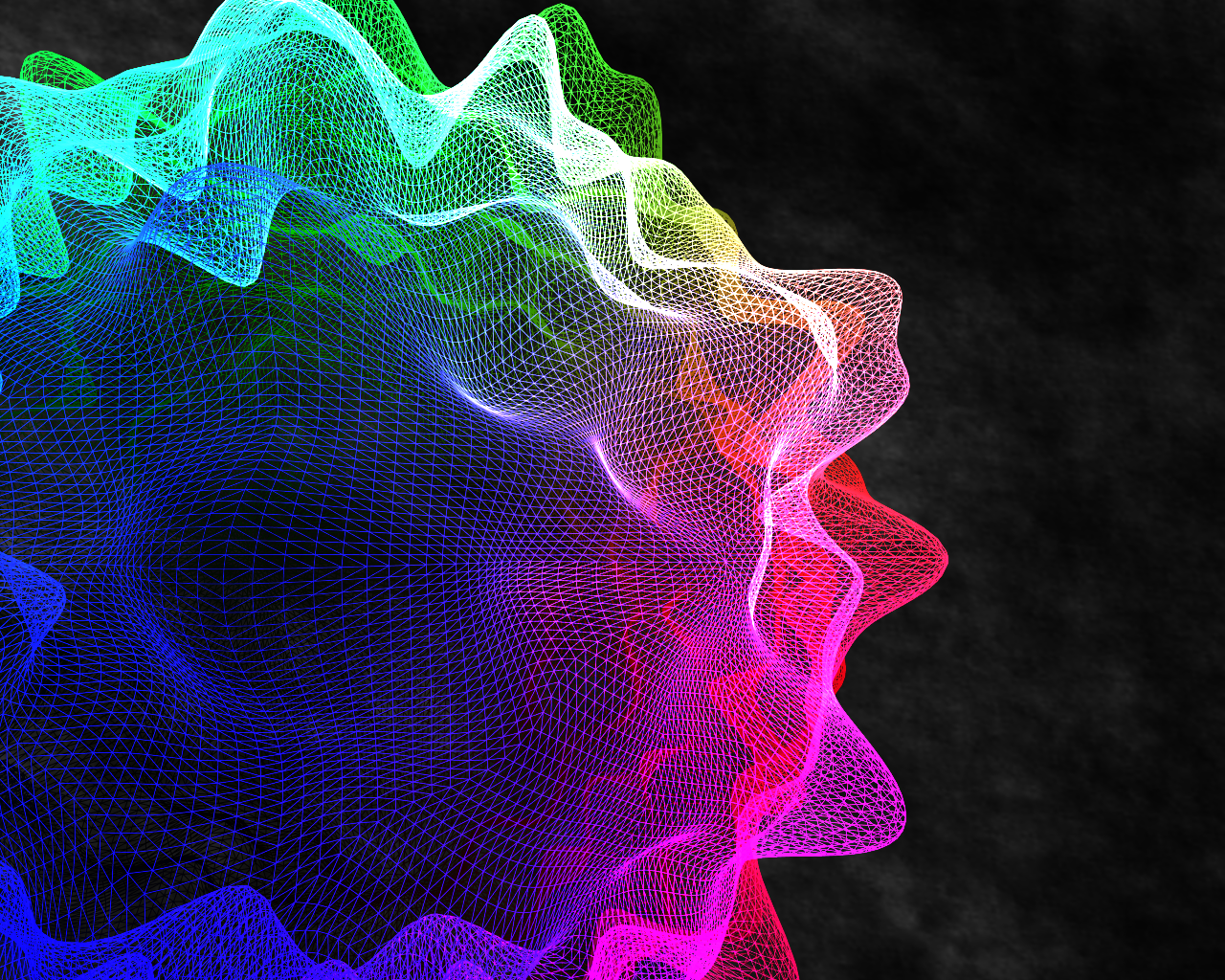

We start with an icosahedron, a 20-faced polyhedron that serves as a coarse approximation of a sphere. We use the GPU's tessellation shaders to subdivide it to a much finer density. To create a sphere, we would re-position each of these new vertices at a distance from the icosahedron's center equal to the desired radius. Here we want something more interesting, so we calculate each vertex's position using 4D simplex noise. If we then color the vertices based on their position relative to the icosahedron's center, we get a picture like the one below. (The 2D noise pattern visible in the background is a 2D slice of the same 4D noise used for the vertex displacement.)
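To make the displacement step concrete, here is a CPU-side Python sketch of roughly what a tessellation evaluation shader would do per vertex. It assumes the noise value is added to the radius along each vertex's direction from the center, and that the fourth noise coordinate is time; neither detail is spelled out above. `stand_in_noise` is a hypothetical placeholder (a product of sines) and is *not* simplex noise; a real 4D simplex implementation would take its place. Tessellation is skipped, so only the 12 base vertices are displaced.

```python
import math

# Golden ratio: the 12 icosahedron vertices are the cyclic permutations of (0, ±1, ±PHI).
PHI = (1.0 + math.sqrt(5.0)) / 2.0


def icosahedron_vertices():
    """Return the 12 vertices of a regular icosahedron centered at the origin."""
    verts = []
    for a in (-1.0, 1.0):
        for b in (-PHI, PHI):
            verts.append((0.0, a, b))  # (0, ±1, ±PHI)
            verts.append((a, b, 0.0))  # (±1, ±PHI, 0)
            verts.append((b, 0.0, a))  # (±PHI, 0, ±1)
    return verts


def stand_in_noise(x, y, z, t):
    """Hypothetical placeholder for 4D simplex noise: smooth, bounded to [-1, 1].
    This is NOT simplex noise; swap in a real implementation."""
    return math.sin(1.7 * x + t) * math.sin(2.3 * y - t) * math.sin(1.1 * z + 0.5 * t)


def displace(vertex, radius, amplitude, t, noise=stand_in_noise):
    """Push a vertex onto a sphere of `radius`, then offset it radially by
    amplitude * noise(direction, t). amplitude=0 gives a plain sphere."""
    x, y, z = vertex
    length = math.sqrt(x * x + y * y + z * z)
    nx, ny, nz = x / length, y / length, z / length  # unit direction from the center
    r = radius + amplitude * noise(nx, ny, nz, t)
    return (nx * r, ny * r, nz * r)


if __name__ == "__main__":
    for v in icosahedron_vertices():
        p = displace(v, radius=2.0, amplitude=0.25, t=1.3)
        # The distance from the center is what a "color by position" pass could map to a color ramp.
        print(round(math.sqrt(sum(c * c for c in p)), 3))
```

With `amplitude=0.0` every vertex lands exactly on the sphere of the given radius, which is the plain-sphere case described above; a nonzero amplitude moves each vertex in or out by at most that amount.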

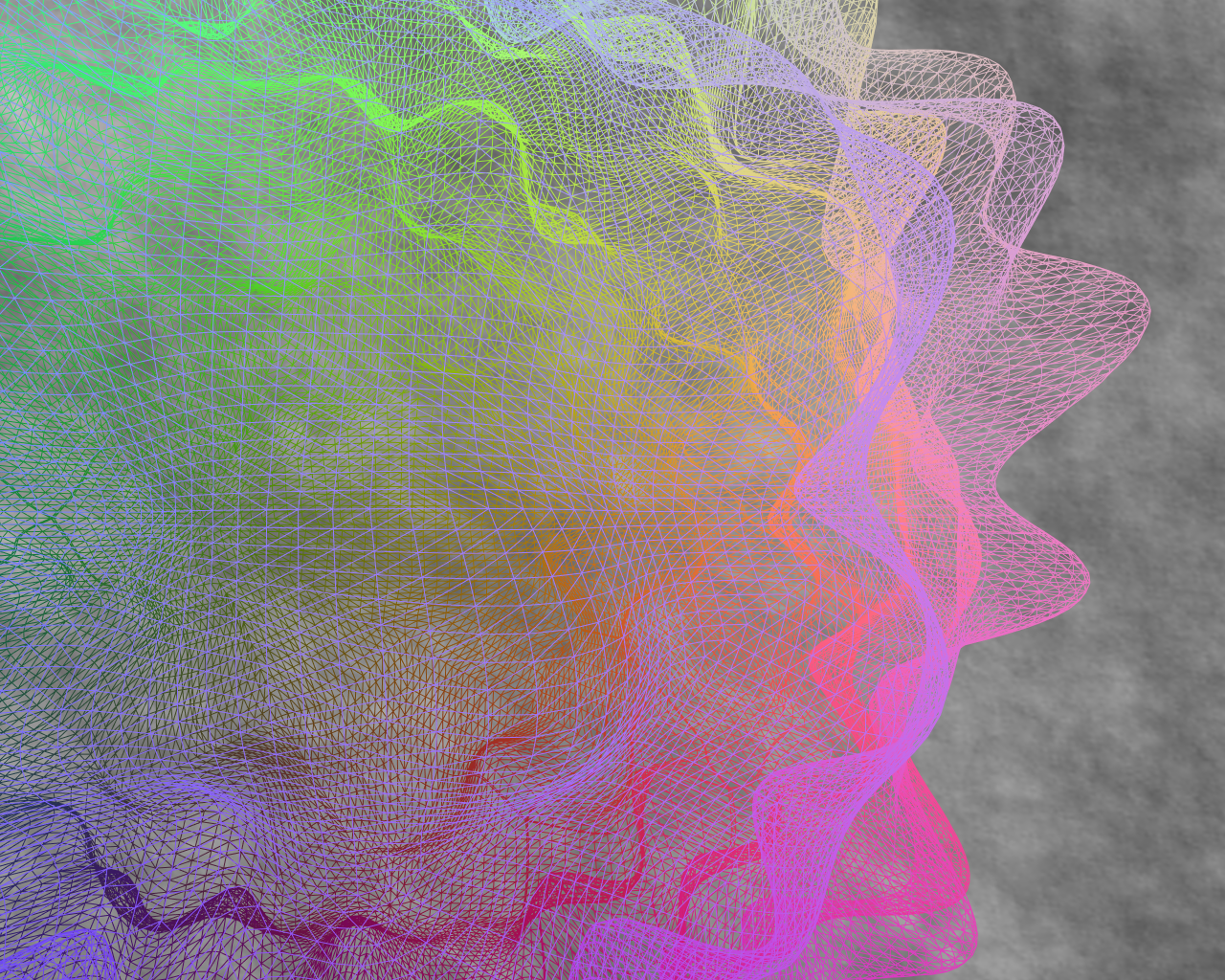

If you look closely at the image above, you'll notice (especially near the left side) that the tessellation factor is not constant across the icosahedron's surface. The tessellation factor for each edge depends on that edge's distance from the camera's eye point. Since the factor depends only on the edge itself, both triangles that share an edge compute the same value for it, which keeps the resulting mesh watertight (no cracks open up along shared edges).

The last piece we needed was a full-screen post-processing shader, and for this I implemented a simple depth-of-field effect. The icosahedron's fragment shader computes a per-fragment blur amount (also based on the distance between the icosahedron and the camera's eye point) and stores it in the alpha channel of the output color. A blurred copy of the rendered icosahedron is then generated using a separable Gaussian blur filter implemented in GLSL. Finally, the original and blurred renderings are interpolated, using the per-fragment blur amount as the blend factor. Combining this with the appropriate alpha blending settings produces the image below.
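The depth-of-field steps can be sketched on the CPU in Python. This is an illustration rather than the project's GLSL: the image is a single-channel grid of floats, and `blur_amount` with its `focus_depth`/`focus_range` parameters is a hypothetical stand-in for the distance-based blur calculation. The blur runs as two 1D passes (horizontal, then vertical), which gives the same result as a full 2D Gaussian convolution; the last step is the per-pixel linear interpolation that GLSL's `mix()` performs.

```python
import math


def gaussian_kernel(sigma, radius):
    """1D Gaussian weights for offsets -radius..radius, normalized to sum to 1."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma)) for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_1d(image, kernel, horizontal):
    """One pass of the separable blur; out-of-range samples are clamped to the edge."""
    h, w = len(image), len(image[0])
    r = len(kernel) // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for k, weight in enumerate(kernel):
                o = k - r  # signed offset from the center tap
                sx = min(max(x + o, 0), w - 1) if horizontal else x
                sy = y if horizontal else min(max(y + o, 0), h - 1)
                acc += weight * image[sy][sx]
            out[y][x] = acc
    return out


def separable_gaussian(image, sigma=2.0, radius=4):
    """Horizontal pass followed by a vertical pass with the same 1D kernel."""
    kernel = gaussian_kernel(sigma, radius)
    return blur_1d(blur_1d(image, kernel, horizontal=True), kernel, horizontal=False)


def blur_amount(depth, focus_depth, focus_range):
    """Hypothetical blur model: 0 at the focus depth, rising linearly to 1."""
    return min(abs(depth - focus_depth) / focus_range, 1.0)


def composite(sharp, blurred, alpha):
    """Per-pixel mix(sharp, blurred, alpha), with alpha holding the blur amount."""
    return [[s + (b - s) * a for s, b, a in zip(rs, rb, ra)]
            for rs, rb, ra in zip(sharp, blurred, alpha)]
```

For a kernel of radius r, each pixel takes 2(2r + 1) samples across the two passes instead of (2r + 1)^2 for a direct 2D kernel, which is the usual reason to split the Gaussian this way.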