Visualizations are one of the most important tools for exploring, understanding, and conveying facts about data. However, the rapidly increasing volume of data often exceeds our capacity to digest and interpret it, even with sophisticated visualizations. Traditional OLAP queries offer high-level summaries of the data but cannot reveal deeper insights. Complex analytics tasks, such as machine learning (ML) and advanced statistics, can help uncover these hidden signals.

Unfortunately, these techniques do not produce insights on their own; they must be guided by the user to reach their full potential. For example, even the best ML algorithms are doomed to fail without proper feature selection, yet finding good features is usually an iterative trial-and-error process in which a domain expert tests different subsets of features until finding those that work best.
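To make the trial-and-error loop above concrete, here is a minimal sketch of an exhaustive feature-subset search. Everything in it is an assumption made for illustration: the toy data is made up, the nearest-centroid classifier and leave-one-out scoring are stand-ins for whatever model and metric a domain expert would actually use, and none of it reflects how HILDE itself is implemented.

```python
from itertools import combinations

# Made-up toy data: each row is (features, label).
# Feature 0 separates the two classes; features 1 and 2 are noise.
ROWS = [
    ((1.0, 5.0, 0.3), 0),
    ((1.2, 1.0, 0.9), 0),
    ((0.8, 9.0, 0.1), 0),
    ((1.1, 3.0, 0.7), 0),
    ((3.0, 4.0, 0.2), 1),
    ((3.2, 8.0, 0.8), 1),
    ((2.9, 2.0, 0.4), 1),
    ((3.1, 6.0, 0.6), 1),
]


def centroid(points):
    """Component-wise mean of a non-empty list of equal-length vectors."""
    n = len(points)
    return [sum(p[i] for p in points) / n for i in range(len(points[0]))]


def loo_accuracy(rows, subset):
    """Leave-one-out accuracy of a nearest-centroid classifier that only
    sees the feature indices listed in `subset`."""
    correct = 0
    for i, (x, y) in enumerate(rows):
        train = rows[:i] + rows[i + 1:]
        centroids = {}
        for label in {lbl for _, lbl in train}:
            pts = [[f[j] for j in subset] for f, lbl in train if lbl == label]
            centroids[label] = centroid(pts)
        query = [x[j] for j in subset]
        predicted = min(
            centroids,
            key=lambda lbl: sum((a - b) ** 2 for a, b in zip(query, centroids[lbl])),
        )
        correct += predicted == y
    return correct / len(rows)


def best_subset(rows, n_features):
    """Try every non-empty feature subset, smallest first, and keep the
    first one that reaches the highest score."""
    best = None
    for k in range(1, n_features + 1):
        for subset in combinations(range(n_features), k):
            score = loo_accuracy(rows, subset)
            if best is None or score > best[1]:
                best = (subset, score)
    return best
```

Calling `best_subset(ROWS, 3)` evaluates all seven subsets. The number of subsets grows exponentially with the number of features, which is one reason this search is normally steered by a person rather than run exhaustively.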

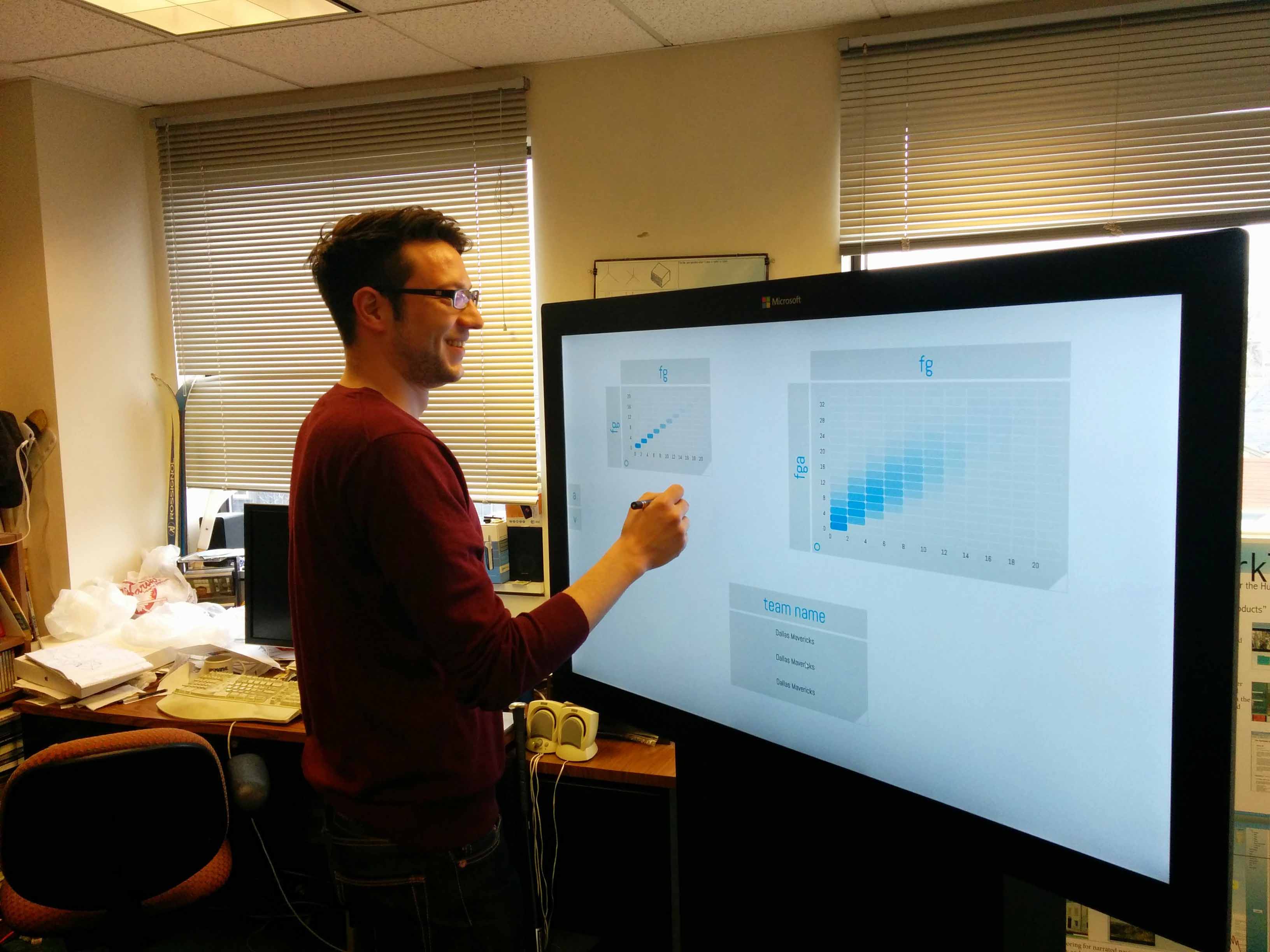

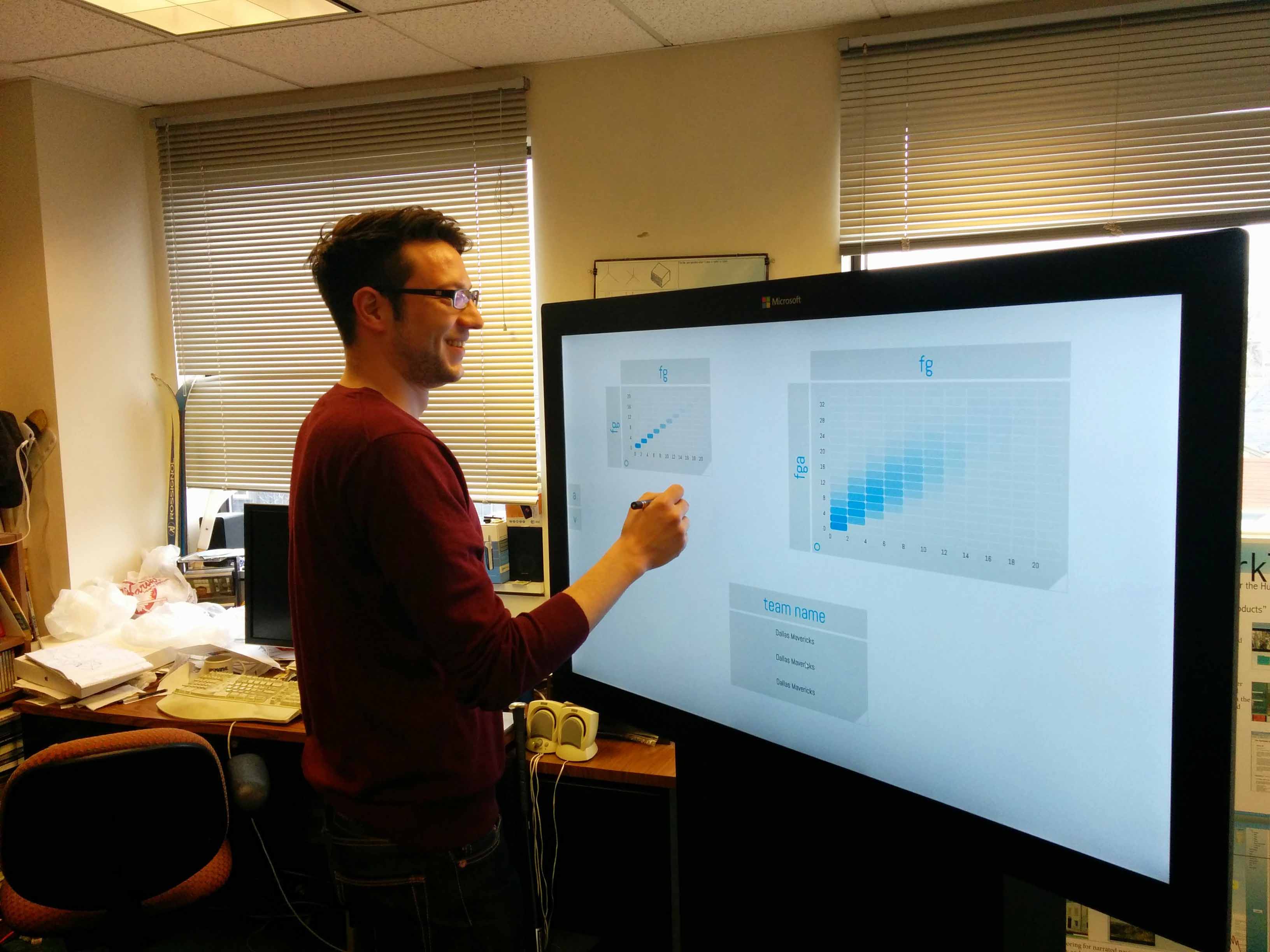

We envision a new system called HILDE (Human-In-the-Loop Data Exploration) that helps users perform complex analytical tasks, including ML, through an easy-to-use pen-and-touch interface (see picture). We will build on two existing systems that you might already know from the Data Science class: PanoramicData and Tupleware. PanoramicData is a visual front-end to SQL that allows users to rapidly search through datasets using visual queries constructed by pen-and-touch manipulation. For HILDE, we are extending PanoramicData's functionality to include sophisticated ML and statistical operators. Tupleware is a new high-performance distributed analytics framework designed at Brown; it uses code generation to improve the performance of complex, CPU-intensive analytics tasks. Over the next few months, we will bring these two systems together to build a first version of HILDE for a particular medical use case.

To make HILDE really work, however, we need to do much more, and that is where you come in. First, we need to extend HILDE's functionality and available operations (e.g., k-means, SVMs) so that it works for other use cases. This is crucial and will be an easy way to get started with this research project. In addition, there are many open research challenges, ranging from exploring new and better visualizations for interactive ML to developing system techniques that make ML more interactive across a spectrum of algorithms. Furthermore, interactive speeds are often only possible with approximation techniques (e.g., visual approximation techniques, incremental result propagation), for which we need new ways to quantify the uncertainty.
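As one illustration of what incremental results with quantified uncertainty can look like, the sketch below streams values through a running mean (Welford's online algorithm) and reports a 95% interval after each batch. It is only a sketch under stated assumptions: the class and function names are hypothetical, the interval uses a normal approximation, and that approximation is only meaningful when rows arrive in random order. It is not a description of HILDE's or Tupleware's actual techniques.

```python
import math


class RunningEstimate:
    """Incrementally updated mean with a normal-approximation interval."""

    def __init__(self):
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x):
        # Welford's online update for mean and variance.
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)

    def half_width(self, z=1.96):
        """Half-width of the interval; z=1.96 corresponds to roughly 95%."""
        if self.n < 2:
            return math.inf
        variance = self._m2 / (self.n - 1)  # sample variance
        return z * math.sqrt(variance / self.n)


def progressive_mean(values, batch_size):
    """Yield (rows_seen, estimate, half_width) after every batch, so a
    front-end could redraw a bar with an error band as data streams in."""
    est = RunningEstimate()
    for i, x in enumerate(values, 1):
        est.update(x)
        if i % batch_size == 0 or i == len(values):
            yield i, est.mean, est.half_width()
```

A caller would iterate over `progressive_mean(column_values, batch_size)` and refresh the display on each yielded tuple, stopping early once the half-width is small enough for the question at hand.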

For next fall, we are looking for three to four people to help us build a first usable version of HILDE. You will work in a team with graduate students and have regular meetings with faculty members from the DB group. This is a hands-on project, a way to get involved in systems and data science research, and it has high potential to lead to a top-tier conference publication.

If you are interested, please send your CV and transcript to alexander_galakatos@brown.edu. Please also mention which aspect of the system interests you most and how comfortable you are with C++, Python, C#, database internals (indexes, joins, etc.), LLVM, and front-end development.