Telecollaboration: Beyond Memex and NLS


In 1968 at the FJCC (Fall Joint Computer Conference), Douglas Engelbart (3) demonstrated the NLS/Augment system. Later called "The Mother of All Demos" by Andy van Dam, this real-time, interactive telecollaboration with his lab at SRI (Stanford Research Institute) paved the way for human-computer interaction research. Sections of it are currently on display in the Exhibit on The Information Age at the Smithsonian Museum of American History.

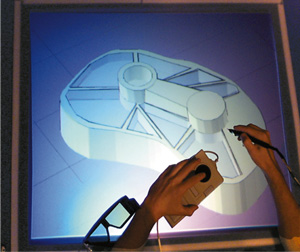

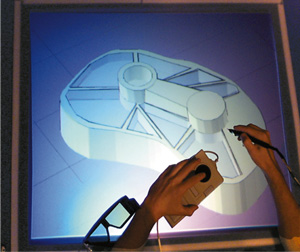

In 1997 the Graphics and Visualization STC is building a new vision based upon the foundation laid by Bush and Engelbart. Whereas Bush envisioned scholarly interaction based on hypertextually linked microfilm and Engelbart simply demonstrated distant collaboration, we seek to provide an end-to-end, real-time, integrated system that takes a product from first rough design sketches through parametric modeling to final production. In addition, we want this system to provide a wide-range, human-centered VR environment in which the participants experience each other as naturally as if they were in the same physical environment.

We were also encouraged by the successful linking of the Alpha_1 CAD/CAM modeling system (7) in Utah with the Pixel-Planes 5 (8) graphics system at UNC.

In addition, we needed significantly better methods of capturing dynamically changing, large-scale scenes.

Finally, we were not able to fully integrate the creation of a product from rough sketch to final production. Even though the HiBall and the Head-Mounted Display were the result of telecollaboration, they were not designed in a collaborative setting. They were designed primarily at UNC, and the plans were shipped to Utah, where the final product was modeled and manufactured using the Alpha_1 system.

To capture entire dynamically changing scenes, we are addressing both the hardware issues, with the wide field-of-view camera cluster (10) project, and the software issues, with the structured-light scene acquisition (11) project.

To create the end-to-end product design and manufacture system, we are integrating Jot (12), the current incarnation of Sketch (13), with VRAPP to provide an integrated connection with Utah's Alpha_1 modeling system.

In addition, we will pursue our long-term goals in two particular aspects: improving our existing telecollaboration infrastructure, and collaborating to build products or artifacts. In the former, we learn by building a working system; in the latter, we learn by actually collaborating on a real product as a driving application for testing and improving our infrastructure.

To follow our vision in the short term:

It was hosted by Andy van Dam, and the featured speakers included Tim Berners-Lee, Douglas Engelbart, Robert Kahn, Alan Kay, Michael Lesk, Nicholas Negroponte, Ted Nelson, Raj Reddy, and Lee Sproull. In March 1996, ACM published an article on the Symposium and reprinted "As We May Think" in Interactions.

(2) For the Bush Symposium, Paul Kahn of Dynamic Diagrams created an animation of the Memex, which may be downloaded.

(3) Background on the 1968 FJCC demo, including bibliographic references and links to Engelbart's Bootstrap Institute.

(4) HiBall project page

(5) Head-Mounted Display project page

(6) VRAPP project page

(7) Alpha_1 Research System project page

(8) Pixel-Planes 5 home page

(9) Ghost-locking project page

(10) Wide Field of View Camera Cluster project page

(11) Structured Light project page

(12) Jot project page

(13) Sketch - Foundation project page and Sketch - Two-Handed Input project page

(14) Beyond the Plane: Spatial Hypertext in a Virtual Reality World
